PPM-Level Power Supply Long-Term Drift Prediction Algorithm

The deployment of ultra-precision power supplies in metrology, advanced sensor biasing, and fundamental physics experiments has elevated long-term stability from a desirable feature to a critical system parameter. While short-term noise and temperature coefficients are well characterized, long-term drift (the change in output voltage or current over weeks, months, or years) remains a complex and seemingly stochastic phenomenon influenced by material aging, component stress relaxation, and cumulative environmental exposure. For applications requiring parts-per-million (ppm) accuracy over extended periods, such as maintaining the calibration of secondary standards or powering superconducting quantum interference device (SQUID) arrays, merely measuring drift is insufficient. Predicting it proactively allows for pre-emptive compensation, extended calibration intervals, and higher data integrity. Algorithms for long-term drift prediction combine empirical data analysis, material science modeling, and machine learning.

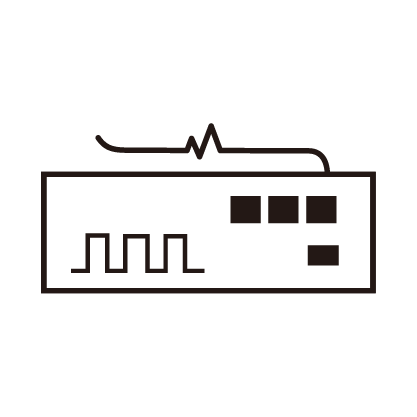

The foundation of any prediction algorithm is data. Modern precision power supplies are equipped with high-resolution internal monitoring analog-to-digital converters (ADCs) that continuously log not only the output voltage and current but also a suite of internal health and environmental parameters. These include the temperatures of the voltage reference chip, the main amplifier, and key resistors; the input line voltage; the output load current; and the elapsed operating time (hour meter). This multivariate time-series data forms the training set. The core physical insight is that long-term drift is not truly random; it results from underlying physical processes, such as the diffusion of contaminants in Zener diode references, mechanical stress relaxation in wire-wound resistors, or the slow relaxation of dielectric absorption in capacitors. These processes have time constants ranging from days to years and are often accelerated by temperature (commonly modeled with the Arrhenius equation) and by electrical stress.
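The temperature acceleration mentioned above can be illustrated with a minimal sketch of an Arrhenius acceleration factor. The function names, the 25 °C reference temperature, and the 0.7 eV activation energy used in the example are assumptions chosen for illustration; a real activation energy would have to be derived empirically for the specific component.

```python
import math

BOLTZMANN_EV_PER_K = 8.617333262e-5  # Boltzmann constant in eV/K


def arrhenius_acceleration(ea_ev, t_ref_c, t_op_c):
    """Ratio of the aging rate at t_op_c to the rate at t_ref_c (both in deg C)."""
    t_ref_k = t_ref_c + 273.15
    t_op_k = t_op_c + 273.15
    return math.exp((ea_ev / BOLTZMANN_EV_PER_K) * (1.0 / t_ref_k - 1.0 / t_op_k))


def equivalent_hours(temps_c, hours, ea_ev, t_ref_c=25.0):
    """Convert logged (temperature, duration) pairs into hours of aging at t_ref_c."""
    return sum(
        h * arrhenius_acceleration(ea_ev, t_ref_c, t)
        for t, h in zip(temps_c, hours)
    )


# Hypothetical log: 100 h at 25 C followed by 100 h at 45 C, assumed Ea = 0.7 eV.
aged = equivalent_hours([25.0, 45.0], [100.0, 100.0], ea_ev=0.7)
print(f"Equivalent aging at 25 C: {aged:.0f} h")
```

Expressing the logged temperature history as equivalent hours at a reference temperature gives a single time axis that a drift model can be fitted against.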

A basic predictive model might employ a physics-informed approach. For example, the drift of a buried Zener voltage reference is known to follow a logarithmic trend over time after an initial burn-in period. The algorithm would fit the historical drift data to a function like ΔV = A * log(t) + B, where t is the operating time (t > 0), and A and B are coefficients derived from the specific unit's history and its operating temperature. Temperature acceleration is incorporated using an empirically derived activation energy. The fitted model can then be extrapolated forward to predict the drift at a future date, with an uncertainty bound based on the fit's residuals.
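A minimal sketch of the logarithmic fit described above, using an ordinary least-squares fit in NumPy. The function names, the use of the natural logarithm, and the ±k·sigma band are assumptions for illustration; note that a band built only from residual scatter tends to understate the uncertainty of a long extrapolation.

```python
import numpy as np


def fit_log_drift(hours, drift_ppm):
    """Least-squares fit of drift = A * ln(t) + B; returns (A, B, residual sigma)."""
    hours = np.asarray(hours, dtype=float)
    drift_ppm = np.asarray(drift_ppm, dtype=float)
    x = np.log(hours)  # requires hours > 0, i.e. time counted after burn-in
    a, b = np.polyfit(x, drift_ppm, 1)
    residuals = drift_ppm - (a * x + b)
    sigma = residuals.std(ddof=2)  # two fitted parameters; needs more than 2 points
    return a, b, sigma


def predict_drift(a, b, sigma, future_hours, k=2.0):
    """Extrapolated drift with a simple +/- k*sigma band (assumed, not rigorous)."""
    center = a * np.log(future_hours) + b
    return center, center - k * sigma, center + k * sigma


# Hypothetical history generated from A = 1.5, B = -2.0 with no noise.
t_hist = np.array([100.0, 200.0, 500.0, 1000.0, 2000.0, 5000.0])
d_hist = 1.5 * np.log(t_hist) - 2.0
a_fit, b_fit, s_fit = fit_log_drift(t_hist, d_hist)
center, low, high = predict_drift(a_fit, b_fit, s_fit, 10000.0)
print(f"A={a_fit:.3f}, B={b_fit:.3f}, predicted drift at 10000 h: {center:.2f} ppm")
```

To include temperature acceleration, the hour-meter values could be replaced by temperature-weighted equivalent hours before fitting.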

More sophisticated algorithms use machine learning techniques, particularly Long Short-Term Memory (LSTM) recurrent neural networks or transformer models. These are trained on aggregated, anonymized data from a fleet of power supplies of the same design deployed in various conditions. The network learns the complex, non-linear relationships between the input features (time, temperature history, load cycling, on/off cycles) and the resulting drift. It can identify subtle patterns, such as how a specific pattern of daily thermal cycling correlates with a particular drift signature months later. Once trained, the model is deployed either on the edge, within the power supply's microcontroller, or in a connected cloud service. It ingests the real-time telemetry from the unit and periodically outputs a prediction of the expected drift from the initial calibrated value over the next 30, 90, or 180 days.
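Before any sequence model can be trained, the telemetry has to be arranged into fixed-length input windows paired with a future drift target. The sketch below shows one possible way to do this with NumPy; the function name, the array layout, and the choice of one sample per day are assumptions, and the model itself (LSTM or transformer) is deliberately left out.

```python
import numpy as np


def make_windows(features, drift_ppm, lookback, horizon):
    """Build (X, y) pairs for a sequence model.

    features:  array of shape (n_samples, n_features), e.g. temperature, load.
    drift_ppm: drift from the initial calibrated value at each sample.
    X[i] holds `lookback` consecutive samples; y[i] is the drift `horizon`
    samples after the last sample in that window.
    """
    features = np.asarray(features, dtype=float)
    drift = np.asarray(drift_ppm, dtype=float)
    n = len(drift)
    X, y = [], []
    for end in range(lookback, n - horizon + 1):
        X.append(features[end - lookback:end])  # samples end-lookback .. end-1
        y.append(drift[end + horizon - 1])      # target `horizon` steps ahead
    return np.array(X), np.array(y)


# Hypothetical telemetry: 10 daily samples with 2 features each.
feats = np.column_stack([np.linspace(30.0, 32.0, 10), np.full(10, 0.5)])
drift = np.arange(10) * 0.1
X, y = make_windows(feats, drift, lookback=3, horizon=2)
print(X.shape, y.shape)
```

With daily samples, horizons of 30, 90, and 180 would correspond to the prediction intervals mentioned above, each typically requiring its own target array or a multi-output model.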

The practical implementation of this prediction creates powerful new capabilities. First, it enables predictive calibration. Instead of a fixed, calendar-based recall interval, the system can schedule maintenance when the predicted drift, plus its uncertainty, approaches the acceptable tolerance limit. This maximizes the time between costly calibrations. Second, it allows for software compensation. In a closed-loop system, if the algorithm predicts with high confidence that the output will be 2.1 ppm low in 10 days, the control system can ramp in a digital offset to the setpoint that tracks the predicted drift and reaches +2.1 ppm on that date, effectively nullifying the error as it develops. This active correction can substantially extend the functional calibration period for some applications. Third, it provides health prognostics. A significant deviation of the actual drift from the predicted path is a strong indicator of an impending component failure, such as a capacitor drying out or a resistor becoming unstable, and can trigger an early warning.
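The three capabilities above can be reduced to a few simple decision rules, sketched here under stated assumptions. All function names are hypothetical, predictions are assumed to be available as one value per day, and the 3-sigma anomaly threshold is an arbitrary illustrative choice rather than a recommended setting.

```python
def days_until_recalibration(predicted_ppm, uncertainty_ppm, tolerance_ppm):
    """First day index where |predicted drift| + uncertainty reaches the tolerance.

    Returns None if the limit is not reached within the prediction horizon.
    """
    for day, (p, u) in enumerate(zip(predicted_ppm, uncertainty_ppm)):
        if abs(p) + u >= tolerance_ppm:
            return day
    return None


def compensation_offset_ppm(predicted_ppm_today):
    """Setpoint offset that cancels the drift predicted for the current day."""
    return -predicted_ppm_today


def drift_anomaly(measured_ppm, predicted_ppm, uncertainty_ppm, k=3.0):
    """Flag a measurement that departs from the prediction by more than k sigma."""
    return abs(measured_ppm - predicted_ppm) > k * uncertainty_ppm


# Hypothetical daily forecast: output drifting low by 1 ppm per day.
forecast = [0.0, -1.0, -2.0, -3.0]
sigma = [0.5, 0.5, 0.5, 0.5]
recal_day = days_until_recalibration(forecast, sigma, tolerance_ppm=2.5)
print("Recalibrate on day:", recal_day)
print("Offset for a day predicted 2.1 ppm low:", compensation_offset_ppm(-2.1))
print("Anomaly:", drift_anomaly(measured_ppm=5.0, predicted_ppm=1.0, uncertainty_ppm=1.0))
```

Applying the offset day by day, rather than applying the full future correction immediately, keeps the output within tolerance during the interval instead of trading a future error for a present one.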

The development of such algorithms requires meticulous attention to data quality, feature engineering, and model validation. The predictions must be conservative, with well-quantified confidence intervals, to avoid over-reliance in critical applications. As a result, the power supply evolves from a static component into a self-aware, prognostic entity. Its value shifts from merely providing a stable voltage to guaranteeing the traceability and predictability of that stability over its entire operational life, a cornerstone for the next generation of long-duration, unattended scientific experiments and calibration laboratories.